Everyone is excited about the potential of large language models (LLMs) to assist with forecasting, research, and countless day-to-day tasks. However, as their use expands into sensitive areas like financial prediction, serious concerns are emerging, particularly around memory leaks: information from a model's training data leaking into its outputs. In the recent paper "The Memorization Problem: Can We Trust LLMs' Economic Forecasts?", the authors highlight a key issue: when LLMs are tested on historical data inside their training window, their high accuracy may not reflect real forecasting ability but rather memorization of past outcomes. This undermines the reliability of backtests and creates a false sense of predictive power.

Authors: Alejandro Lopez-Lira, Yuehua Tang, Mingyin Zhu

Title: The Memorization Problem: Can We Trust LLMs' Economic Forecasts?

Link: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=5217505

Abstract:

Large language models (LLMs) cannot be trusted for economic forecasts during periods covered by their training data. We provide the first systematic evaluation of LLMs' memorization of economic and financial data, including major economic indicators, news headlines, stock returns, and conference calls. Our findings show that LLMs can perfectly recall the exact numerical values of key economic variables from before their knowledge cutoff dates. This recall appears to be randomly distributed across different dates and data types. This selective perfect memory creates a fundamental problem: when testing forecasting capabilities before their knowledge cutoff dates, we cannot distinguish whether LLMs are forecasting or simply accessing memorized data. Explicit instructions to respect historical data boundaries fail to prevent LLMs from achieving recall-level accuracy in forecasting tasks. Further, LLMs seem exceptional at reconstructing masked entities from minimal contextual clues, suggesting that masking provides inadequate protection against motivated reasoning. Our findings raise concerns about using LLMs to forecast historical data or backtest trading strategies, as their apparent predictive success may merely reflect memorization rather than genuine economic insight. Any application where future information would change LLMs' outputs can be affected by memorization. In contrast, consistent with the absence of data contamination, LLMs cannot recall data after their knowledge cutoff date. Finally, to address the memorization issue, we propose converting identifiable text into anonymized economic logic, an approach that shows strong potential for reducing memorization while maintaining the LLM's forecasting performance.

As always, we present several interesting figures and tables:

Notable quotations from the academic research paper:

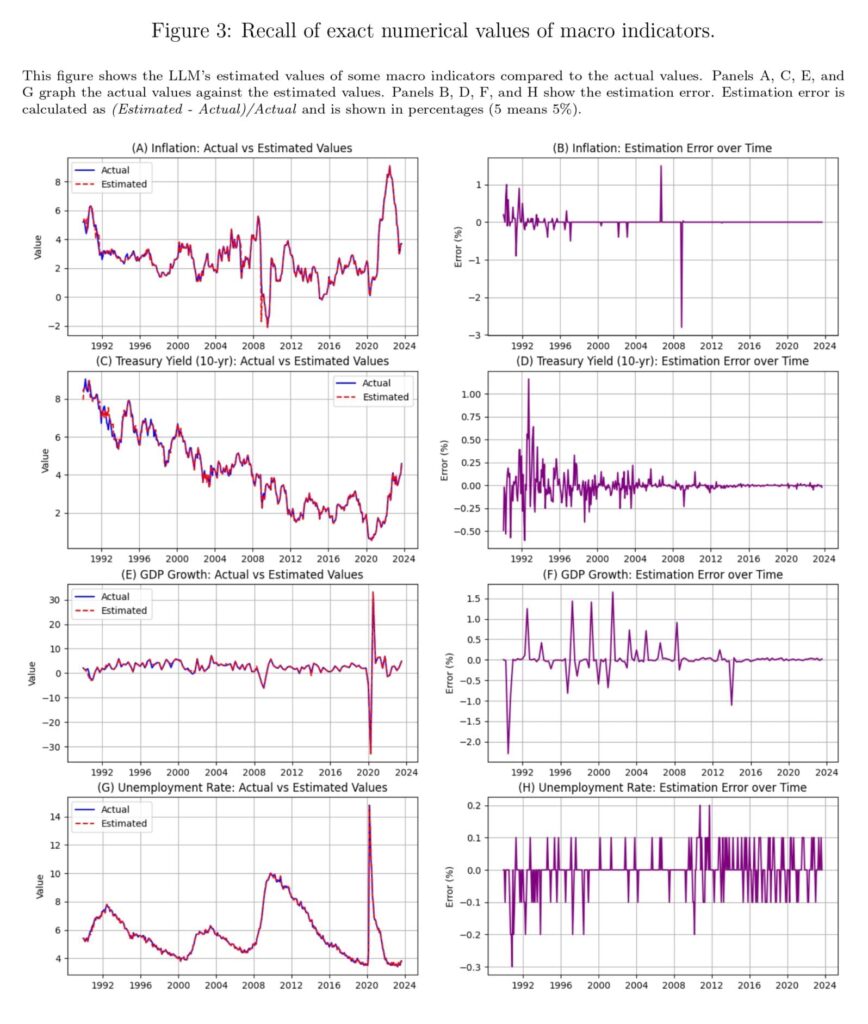

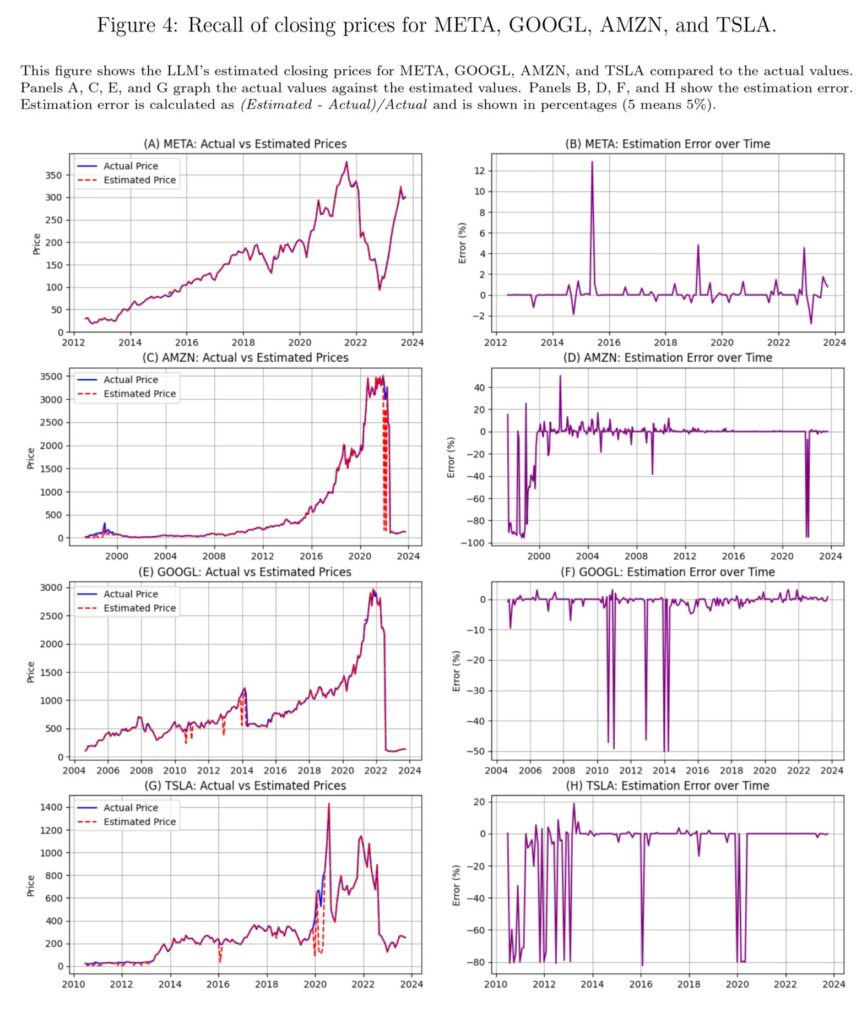

"Using a novel testing framework, we show that LLMs can perfectly recall exact numerical values of economic data from their training. However, this recall varies seemingly at random across different data types and dates. For example, before its knowledge cutoff date of October 2023, GPT-4o can recall specific S&P 500 index values with perfect precision on certain dates, unemployment rates accurate to a tenth of a percentage point, and precise quarterly GDP figures. Figure 1 shows the LLM's memorized values of the stock market indices compared to the actual values, along with the associated errors. LLMs can closely reconstruct the overall ups and downs of the stock market indices, with some substantial errors appearing occasionally and seemingly at random.

The problem can manifest when LLMs are asked to analyze historical data they were exposed to during training and are instructed not to use that knowledge. For example, when prompted to forecast GDP growth for Q4 2008 using only data up to Q3 2008, the model can activate two parallel cognitive pathways: one that generates plausible economic analysis about factors like consumer spending and industrial production, and another that subtly accesses its memorized knowledge of the actual GDP contraction during the financial crisis. The resulting forecast appears analytically sound yet achieves suspiciously high accuracy because it is anchored to memorized outcomes rather than derived from the provided information. This mechanism operates beneath the model's visible outputs, making it nearly impossible to detect through standard evaluation methods. The fundamental problem is analogous to asking an economist in 2025 to "predict" whether subprime mortgage defaults would trigger a global financial crisis in 2008 while instructing them to "forget" what happened. Such instructions are impossible to follow when the outcome is known.

The results reveal a clear ability to recall macroeconomic data. For rates, the model demonstrates near-perfect recall, with Mean Absolute Errors ranging from 0.03% (Unemployment Rate) to 0.15% (GDP Growth) and Directional Accuracy exceeding 96% across all indicators, reaching 98% for the 10-year Treasury Yield and 99% for the Unemployment Rate. This result suggests that GPT-4o has memorized these percentage-based indicators with high fidelity.
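To make the two metrics concrete, here is a minimal Python sketch of how Mean Absolute Error and Directional Accuracy could be computed when comparing a model's recalled values with the true series. It is an illustration under our own assumptions, not the authors' code: in particular, we assume Directional Accuracy means the share of period-to-period moves where the recalled and actual series change in the same direction, and the example numbers are made up.

```python
from typing import Sequence


def mean_absolute_error(recalled: Sequence[float], actual: Sequence[float]) -> float:
    """Average absolute gap between recalled and true values (same units as the series)."""
    if len(recalled) != len(actual) or not actual:
        raise ValueError("series must be non-empty and of equal length")
    return sum(abs(r - a) for r, a in zip(recalled, actual)) / len(actual)


def directional_accuracy(recalled: Sequence[float], actual: Sequence[float]) -> float:
    """Share of period-to-period moves where both series move in the same direction.

    Assumption: a move is correct when the signs of the two changes agree;
    an unchanged value counts as its own direction (sign 0).
    """
    if len(recalled) != len(actual) or len(actual) < 2:
        raise ValueError("series must have equal length and at least two points")

    def sign(x: float) -> int:
        return (x > 0) - (x < 0)

    hits = sum(
        sign(recalled[i] - recalled[i - 1]) == sign(actual[i] - actual[i - 1])
        for i in range(1, len(actual))
    )
    return hits / (len(actual) - 1)


# Made-up illustrative rates in percent (not data from the paper).
actual_rates = [3.6, 3.5, 3.7, 3.8]
recalled_rates = [3.6, 3.5, 3.4, 3.8]
example_mae = mean_absolute_error(recalled_rates, actual_rates)     # about 0.075 percentage points
example_da = directional_accuracy(recalled_rates, actual_rates)     # 2 of 3 moves match
```

Because the indicators here are rates, the MAE comes out in percentage points, which is how figures like "0.03%" for the unemployment rate should be read.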

We observed a similar pattern when we extended our test to ask the model to provide both the headline date and the corresponding S&P 500 level on the next trading day. For the pre-training period, the model achieved high temporal accuracy while maintaining near-perfect recall of index values (mean absolute percent error of just 0.01%). For post-training headlines, both date identification and index level predictions became considerably less accurate.
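The headline test can be scored with two numbers per period: how often the model names the exact publication date, and the mean absolute percent error (MAPE) of the recalled next-day S&P 500 level. The Python sketch below shows one way to do that and to compare the pre-cutoff and post-cutoff groups. The `HeadlineProbe` record layout and the `boundary` parameter are hypothetical names of our own, the choice of boundary date is an assumption the user must make, and the index levels in the example are artificial round numbers.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class HeadlineProbe:
    """One headline-recall test (hypothetical record layout)."""
    headline_day: date      # true publication date of the headline
    stated_day: date        # date the model claims the headline appeared
    recalled_level: float   # S&P 500 level the model gives for the next trading day
    actual_level: float     # true S&P 500 close on the next trading day


def date_accuracy(probes: list[HeadlineProbe]) -> float:
    """Share of probes where the model names the exact publication date."""
    return sum(p.stated_day == p.headline_day for p in probes) / len(probes)


def mape(probes: list[HeadlineProbe]) -> float:
    """Mean absolute percent error of the recalled index levels, in percent."""
    return 100.0 * sum(
        abs(p.recalled_level - p.actual_level) / abs(p.actual_level) for p in probes
    ) / len(probes)


def compare_pre_post(probes: list[HeadlineProbe], boundary: date) -> dict[str, dict[str, float]]:
    """Score pre-cutoff and post-cutoff probes separately.

    boundary: first day treated as post-cutoff (an assumption you must choose).
    """
    groups = {
        "pre": [p for p in probes if p.headline_day < boundary],
        "post": [p for p in probes if p.headline_day >= boundary],
    }
    return {
        name: {"date_accuracy": date_accuracy(g), "mape_pct": mape(g)}
        for name, g in groups.items()
        if g  # skip empty groups instead of dividing by zero
    }


# Artificial example: two "memorized" pre-cutoff probes, one post-cutoff miss.
example_probes = [
    HeadlineProbe(date(2023, 3, 1), date(2023, 3, 1), 4000.0, 4000.0),
    HeadlineProbe(date(2023, 6, 1), date(2023, 6, 1), 5000.0, 5000.0),
    HeadlineProbe(date(2024, 2, 1), date(2024, 1, 15), 4750.0, 5000.0),
]
example_result = compare_pre_post(example_probes, boundary=date(2023, 11, 1))
```

A large gap between the "pre" and "post" rows is the signature of memorization the paper describes; a genuine forecaster should not become dramatically worse just because the target falls after its training data.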

These results connect to our earlier findings on macroeconomic indicators, where high pre-cutoff accuracy reflected memorization. The strong post-cutoff performance without user prompt reinforcement mirrors the suspiciously high accuracy seen in other tests when constraints were not strictly enforced, suggesting that GPT-4o defaults to using its full knowledge unless explicitly and repeatedly directed otherwise. The high refusal rate with dual prompts aligns with weaker recall for less prominent data, as seen in small-cap stocks, indicating partial compliance but not full isolation from memorized knowledge. This failure to fully respect cutoff instructions reinforces the challenge of using LLMs for historical forecasting, as their outputs may subtly incorporate memorized data, necessitating post-cutoff evaluations to ensure genuine predictive ability."
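For practitioners, the practical takeaway is to score an LLM-based strategy only on targets whose outcomes became known after the model's knowledge cutoff. The Python sketch below is one hedged way to enforce that in a backtest. The function names `first_safe_day` and `post_cutoff_only` are hypothetical, and treating the entire cutoff month as potentially memorized is our own conservative assumption, since model vendors typically publish only a month (the quote gives October 2023 for GPT-4o).

```python
from datetime import date


def first_safe_day(cutoff_year: int, cutoff_month: int) -> date:
    """First calendar day after the stated knowledge-cutoff month.

    Conservative assumption: the whole cutoff month may be memorized.
    """
    if cutoff_month == 12:
        return date(cutoff_year + 1, 1, 1)
    return date(cutoff_year, cutoff_month + 1, 1)


def post_cutoff_only(
    forecasts: dict[date, float], cutoff_year: int, cutoff_month: int
) -> dict[date, float]:
    """Keep forecasts keyed by the date their target outcome becomes known,
    dropping every target that could have appeared in the training data."""
    start = first_safe_day(cutoff_year, cutoff_month)
    kept = {d: f for d, f in forecasts.items() if d >= start}
    if not kept:
        raise ValueError(f"no targets known on or after {start}; nothing to evaluate out of sample")
    return kept


# Hypothetical forecasts (e.g. GDP growth in %) keyed by outcome release date.
example_forecasts = {date(2023, 10, 15): 1.0, date(2023, 12, 1): 2.0}
example_kept = post_cutoff_only(example_forecasts, 2023, 10)  # only the December target survives
```

Keying forecasts by the date the outcome becomes public, rather than the period it describes, matters for macro data: a Q3 figure released in late October could already be in the training data, whereas a value published months after the cutoff could not.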

Are you looking for more strategies to read about? Sign up for our newsletter or visit our Blog or Screener.

Do you want to learn more about Quantpedia Premium service? Check how Quantpedia works, our mission and Premium pricing offer.

Do you want to learn more about Quantpedia Pro service? Check its description, watch videos, review reporting capabilities and visit our pricing offer.

Are you looking for historical data or backtesting platforms? Check our list of Algo Trading Discounts.

Would you like free access to our services? Then, open an account with Lightspeed and enjoy one year of Quantpedia Premium at no cost.

Or follow us on:

Facebook Group, Facebook Page, Twitter, LinkedIn, Medium or YouTube
